By Gareth Evenson

Overview

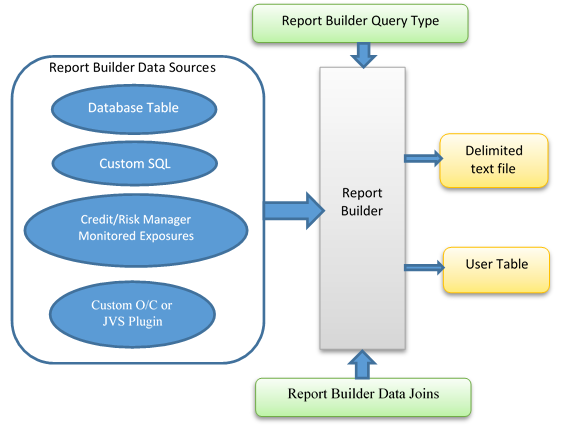

A recent project was undertaken for a banking client to create a data warehouse interface from Findur as part of a new system implementation. The interface development was undertaken using a combination of report builder to generate delimited and a post process task to create control information including file information and check-sums. This approach also supports the roadmap migration of the interface to a direct injection of data to user tables for loading into the external warehouse with no additional development and minimal system configuration.

This document provides an overview of the use of Report Builder as a tool for implementing the data interface, rather than details of the interface itself.

Report Builder Architecture

The report builder tool provides a simple method of generating extracts from Findur in a number of formats with minimal (often zero) development. The report builder architecture combines a set of components and output types as illustrated below:

These components are described below:

- Report Builder – Findur/Endur report production and generation application

- Data Sources – data input components providing inputs to the report process, which include: Endur/Findur database tables and views, custom SQL saved in text files, custom plug-ins developed in JVS or Open Components, etc.

- Query Types – the primary category of report developed, which are categories such as transactions, indices, credit risk exposure definitions plus other data sources. There data selection associated with these is defined by saved queries or the full output of the given data source.

- Data Joins: SQL like join criteria to link the selected data sources.

- Output types/formats: text files, XL sheets, DB table insert, XML files, etc.

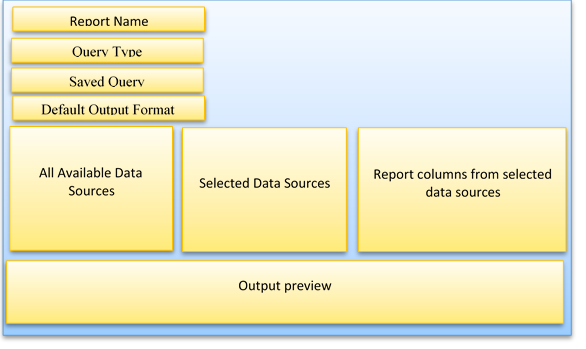

The following diagram illustrates the report builder in main window with the various panels showing the some of the components listed above:

Data Selection Query Types

The report Query Type is the master criteria for defining the report or primary data selection criteria. Once selected the “Selected Data Sources” list is populated with an initial entry based on the query type e.g. Ab Tran for Transactions.

The following table shows the full set of query types available:

| Query Types | Comment |

| Data Source – Auto Match | Pre-defined report data source |

| Data Source – Report Builder Report | Pre-defined report data source |

| Data Source – Table Snapshot | Pre-defined report data source |

| Data Source – CSV No Header | Pre-defined report data source |

| Data Source – XML | Pre-defined report data source |

| Data Source – CSV | Pre-defined report data source |

| Data Source – Custom SQL | Pre-defined report data source |

| Data Source – Plugin | Pre-defined report data source |

| Database Table | |

| Transactions | |

| Party | |

| Party Account | |

| Settlement Instruction | |

| Party Agreement Document | |

| Personnel | |

| Transaction Events | |

| Netting Agreement | |

| Confirmation | |

| Index | |

| Party Group | |

| Agreements | |

| Corporate Actions | |

| Back Office Documents | |

| Schedule Nominations | |

| Power Delivery | |

| CMotion | |

| CMotion Details | |

| Nerc ETag | |

| Commodity EFP | |

| TPM History | |

| CMotion View | |

| Portfolios | |

| Contract | |

| Accounting Accounts | |

| Accounting Rules | |

| Accounting Manual Entries | |

| Accounting Journal Entries | |

| Accounting Balances | |

| Accounting Processing Logs | |

| Accounting Regions | |

| Accounting Periods | |

| Risk Exposures | |

| Credit Exposures | |

| Nostro Account Position | |

| Bank Balances | |

| Bank Transactions | |

| SWIFT Statements | |

| SWIFT Cash Advice | |

| Input Files | |

| SIM Data Warehouse | |

| Auto Match Activity Audit | |

| Transactions – Trading |

The Saved Query provides the primary selection criteria for the report generation, and this list is driven by the Query Type. So if Index is the Query Type then only Market Manager Queries will by listed.

Note that a Saved Query is not always required – for example where the Query Type is a custom plugin then this data item will generate the data selection list.

Once the query type has been selected the “Selected Data Sources” panel will be populated with an initial data source of the appropriate type e.g. if query type “Index” is defined then the Index Definition data source will be selected.

Hint: The report will require that you join all results to this initial data source populated from the saved query. If you wish to create a report which is not driven from a saved query (if this not appropriate), then a plugin data source may be required as the query type. The plugin can then generate the initial data set for creation of the output.

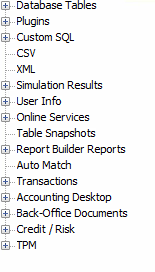

Report Builder Data Sources

Report builder data sources are, as the name suggest, the set of data inputs available for any report. The following data source types are available within the report builder tool. Most of these should be self-explanatory:

The set of data sources used for the data warehouse interface were as follows:

- Database Tables : Native Openlink tables

- Custom SQL: custom SQL statements saved in text files and imported into the Openlink file system. These are useful where complex outer joins and sub-selects are needed for data selection which cannot be defined in the standard table join structure.

- Plugins: Custom plugins developed in C#//OC. These were used in particular for simulation result management where the native report builder interface is too blunt a tool, and also for market data management where the existing data sources are also limited.

- Credit / Risk: Extraction of Credit/Market risk limits and utilisations (note that the risk managers must be running to extract this data)

Creating and Using Data Sources

A number of standard data sources are available out of the box, including access to all native tables within the OpenLink database. Where these are used there is no need to create new data sources.

In the case of Custom SQL or Plugins then a new data source musty be created to expose the sql/plugin to the report builder tool. The process is very similar and the screen shots below show how this is done for native SQL.

For the Data Warehouse interface the SQL was developed in a text editor then the .SQL files were imported into the system via the directory manager, under a new User Branch which I had created:

User / ReportBuilderQuery

The file is imported via the “Import” window.

Once the file is in the file system then from the Report Builder / Advanced tab, the Data Source Editor window is used to create the new data source.

The data source editor data describe button executes the SQL and creates the data definition listed below.

The name of the data source is free form but it is recommended that a standardised naming convention is adopted, for obvious reasons.

Hint: For plugin-ins I personally recommend that the plugin has a default behaviour which creates the output table ad returns this so that the data source can be defined. Although it may be easier to create the output table structure as part of the plug-in execution (via table Select for example), when executed from the Data Source Editor no parameters are passed to the plug-in so often no data is returned.

Data Source Joins

Once data sources are added to a report, they are linked together via joins, which have a SQL-line syntax, example of the join data is shown here:

| Alias | Join Type | Join From | Join To | Join Clause |

| dxr1 | INNER | ab_tran (dxr1) | None | dxr1.tran_num = qr.query_result |

| dxr2 | INNER | parameter (dxr2) | ab_tran (dxr1) | dxr2.ins_num = dxr1.ins_num AND dxr2.currency != dxr1.currency |

| dxr3 | INNER | parameter-1 (dxr3) | ab_tran (dxr1) | dxr3.ins_num = dxr1.ins_num AND dxr3.currency = dxr1.currency |

| dxr5 | INNER | header (dxr5) | ab_tran (dxr1) | dxr5.ins_num = dxr1.ins_num |

| dxr6 | INNER | SQL_FX_Flows (dxr6) | ab_tran (dxr1) | dxr6.tran_num = dxr1.tran_num |

| dxr8 | OUTER | SQL_FX_Tran_Aux_Data (dxr8) | ab_tran (dxr1) | dxr8.tran_group = dxr1.tran_group |

Joins can be aliased (not shown above) to give more descriptive names

The Join Type can be inner / outer / union

The join clause may also include system variables and report parameters which are given the following syntax: $$VAR_NAME$$. For example the join below is using the report parameter SYSTEM_DATE:

| Alias | Join Type | Join From | Join To | Join Clause |

| dxr1 | INNER | acs_account (dxr1) | None | dxr1.acs_account_id = qr.query_result |

| dxr4 | INNER | acs_balance_details (dxr4) | acs_balance_header (dxr5) | dxr4.acs_balance_hdr_id = dxr5.acs_balance_hdr_id AND abs(dxr4.acs_balance) > 0.001 AND dxr4.acs_balance_date = ‘$$SYSTEM_DATE$$’ |

| dxr5 | INNER | acs_balance_header (dxr5) | acs_account (dxr1) | dxr5.acs_account_id = dxr1.acs_account_id |

I emphasise this last point because the set of intrinsic parameters is limited and not well documented. So the use of report parameters for join criteria and also for output report naming is common and was used often.

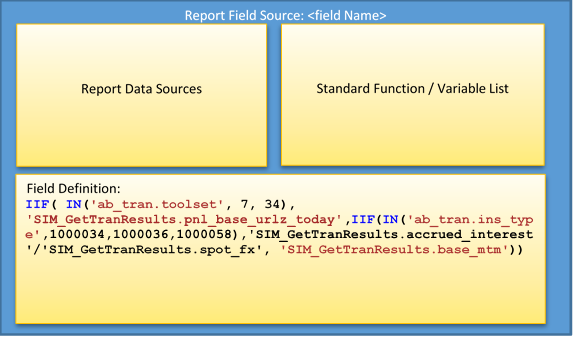

Report Field Definition

Once the data source has been added to the report then the fields within the data source are added to the report by drag and drop.

Fields can be reordered on the report by the use of the up and down arrows (and I recommend thus rather than try and drag a field to a particular place on the report).

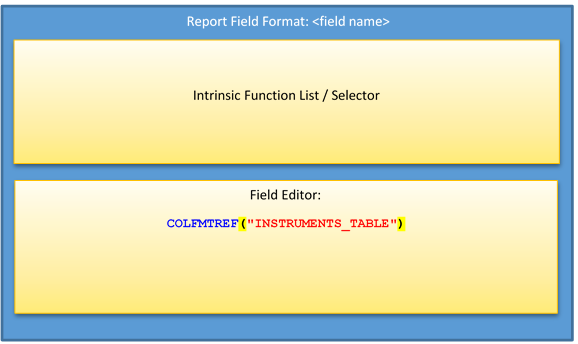

Once on the report the field definition can be viewed and edited. The field editor window layout is shown below. There are three windows:

- The report data sources. These can be expanded and other fields added by double-click on the field name.

- Functions: Set of intrinsic functions that can be used – example below shows use of the IIF() conditional statement.

- Field definition.

Field format and aggregation etc, can also be driven from the report builder main window. An example is shown below. Note the distinction between the column name and title. Extraction to database tables uses the name not the title, for example.

The report output field window has the following structure:

| Column Title | Source Type | Output Type | Sort Order | Sort Direction | Justifi cation | Aggre gation | Sub Total | Grand Total | Include In Output | Column Name |

| deal_id | Integer | Integer | 1 | Ascend Numeric | Left | None | None | None | Include | deal_tracking_num |

| deal_leg | Integer | Integer | 2 | Ascend Numeric | Left | None | None | None | Include | deal_leg |

| tfi_id | Integer | Integer | None | Left | None | None | None | Include | ins_num | |

| ins_type | Integer | Integer | None | Left | None | None | None | Include | ins_type | |

| portfolio | Integer | Integer | None | Left | None | None | None | Include | internal_portfolio | |

| proj_idx | Integer | Integer | None | Left | None | None | None | Include | proj_idx | |

| disc_idx | Integer | Integer | None | Left | None | None | None | Include | disc_idx | |

| currency_id | Integer | Integer | None | Left | None | None | None | Include | currency_id | |

| base_currency | String | String | None | Left | None | None | None | Include | base_currency | |

| mtm | Double | Double | None | Right | None | None | None | Include | mtm |

Data aggregation wasn’t used in this interface and won’t be discussed further in this document.

Intrinsic Functions

There are a set of intrinsic functions which can be accessed from the field editor shown above. The set of function criteria are as follows:

- Comparison

- Conversion

- Date/Time

- Debugging

- Format

- Lookup

- Math

- Miscellaneous

- Query

- SQL

- String

An example of an expanded list of functions is shown here:

| Name | Description | Syntax | |

| Comparison | |||

| BETWEEN | If compare value is between two test values | BETWEEN( <compare value> , <test value 1>, <test value 2> ) | |

| IIF | If/Else statement | IIF( <condition> , <value if true>, <value if false> ) | |

| IN | If compare value is in the list of test values | IN( <compare value> , <test value 1>, <test value 2> ) | |

| ISBLANK | If the string value is empty or all white space | ISBLANK( <string value> ) | |

A function can be added to the field definition window via double-click on the function name. This can then be filled out by adding fields from the data sources.

Field Formatting

Once fields are defined the format of the field can then be modified as required and a large number of data formatting functions are provided. The screen shot below shows the field formatting window. In this case the column is formatted as a reference field.

Note that the system will attempt a standard format based on the data source meta-data definition, so where for example a field is selected from a table column which has a format defined (ins type for example), this will be applied.

There are two approaches to formatting: Quick Display Format Vs Quick Data Format

For data format this can modify the field type, from number to text for example. This can have implications for data extraction to database tables.

The following table shows some of the formatting functions available:

| Format | |||

| FMTDBDTM | Format a date-time in database format | FMTDBDTM( <value> ) | |

| FMTDBL | Format a double as a string | FMTDBL( <value>, “NOTIONAL”, <width>, <precision> ) | |

| FMTDBL | Format a double as a string | FMTDBL( <value>, “PLAIN”, <width>, <precision> ) | |

| FMTDBL | Format a double as a string | FMTDBL( <value>, “ACCOUNTING”, <width>, <precision> ) | |

| FMTDTM | Format a date/time as a string | FMTDTM( <value>, <format string> ) | |

| FMTINT | Format an integer or long as a string | FMTINT( <value>, “PLAIN”, <width> ) | |

| FMTINT | Format an integer or long as a string | FMTINT( <value>, “NOTIONAL”, <width> ) | |

| FMTINT | Format an integer or long as a string | FMTINT( <value>, “ACCOUNTING”, <width> ) | |

Defining Output Formats

The following output formats are available. Most are self-explanatory.

- Crystal

- CSV

- DB Table

- DMS

- Excel

- Flat (File)

- HTML

- Plugin

- Screen

- Snapshot

- XML

For the data warehouse only CSV (in fact bar delimited) and DB Table were used.

An example of the definition of the output report is shown below:

| Property Name | Property Value | Default Value |

| Report output file name | QTC_PNL_DETAIL_$$BUSINESS_DATE$$.TXT | $$GEN_DATE$$_$$RUN_ID$$.$$OUTPUT_EXT$$ |

| Report output directory | $$OUTPUT_DIR$$ | $$OUTPUT_DIR$$ |

| Database directory | $$DATABASE_DIR$$ | $$DATABASE_DIR$$ |

| Save report to database | No | No |

| Database security | p=rw | p=rw |

| Overwrite file | Yes | No |

| Display report in viewer | No | No |

| Report Data to OP Service | No | No |

| Output Only Sub Total Lines | No | No |

| Quote strings | No | No |

| Field separator | Bar | Comma |

| Suppress Column Names | No | No |

| Deliver report via email | No | No |

The list of possible delimiters as standard is:

- Bar

- Colon

- Comma

- Semi-Colon

- Space

- Tab

Note the use of the $$BUSINESS_DATE$$ variable in the file name. Again this is a report parameter rather than intrinsic variable.

table shows definition of a DB Table extract to a user table:

| Property Name | Property Value | Default Value |

| Table Name | USER_TABLE_NAME | |

| Display Report In Viewer | Yes | No |

| Report Data to OP Service | No | No |

| Output Only Sub-Total Lines | No | No |

| Force New Table | Yes | No |

| Remove All Entries | Yes | No |

| Bulk Upload | No | No |

If the use session has permissions then the report builder can create the user table on the fly.

Defining Parameters and Variables

Parameters to the reports can be defined and defaulted, and as noted above may be created to allow use of the variables in data joins and report names.

These parameters are passed into plug-ins and can then control plug-in execution. For example the REVAL_ADHOC parameter is used to define if an intra-day or EOD sim result is used in the plug-in. Example of the parameter definition is shown here:

| Data Source | Parameter Name | Parameter Value | Prompt User For Value | Value Expression | Parameter Description | Picklist | Parameter Title | Format Expression |

| ALL | BUSINESS_DATE | FALSE | FMTDTM(NOW(“SYSTEM”) , “%Y%m%d”) | |||||

| ALL | REVAL_ADHOC | No | FALSE | NO_YES_TABLE | ||||

| ALL | CONTINUE_ON_ ERROR | Yes | FALSE | NO_YES_TABLE | ||||

| ALL | REVAL_TYPE | EOD | FALSE | REVAL_TYPE_TABLE |

The parameter definition has access to all of the standard function list available for field definition.

Code Management / CMM

Report builder is well integrated to the Configuration Management Module (CMM), and report definitions are easily exported and imported via this interface. There is no report versioning, so regular export of the report definitions is a useful way of managing code baselines and versions. This is also a simple and efficient way to release new code.

Hint: The main downside of CMM is that it has a tendency when managing dependencies in a package to pick up some non-dependent items. Care should be taken with this when moving code as sometimes code which is not part of the direct dependency is migrated and can overwrite the target system (particularly for JVS code projects where only parts are used in the repot). You may want to consider this issue when defining you code project structures.

List of data warehouse elements

The following table provides a full list of the data items extracted for the data warehouse interface:

| No | Logical Grouping | Interface Item | Source | Destination |

| 1 | Account Balances | Call notice Account Balances | Findur | Warehouse |

| 2 | Business Unit hierarchy | Call Notice acct detals | Findur | Warehouse |

| 3 | Business Unit hierarchy | Credit Rating | Findur | Warehouse |

| 4 | Business Unit hierarchy | External Party Details | Findur | Warehouse |

| 5 | Business Unit hierarchy | Internal Party Details | Findur | Warehouse |

| 6 | Business Unit hierarchy | Party Function | Findur | Warehouse |

| 7 | Business Unit hierarchy | Party Info – additional party data | Findur | Warehouse |

| 8 | Business Unit hierarchy | Party Relationship Details | Findur | Warehouse |

| 9 | Business Unit hierarchy | Portfolio | Findur | Warehouse |

| 10 | Call Notice Balances | Call notice Account Balances | Findur | Warehouse |

| 11 | Call notice mothly statement | Call notice account monthly statement extract | Findur | Warehouse |

| 12 | Call Notice Movements | Call notice Account Movements | Findur | Warehouse |

| 13 | Call Notice Rate Details | Call notice account interest rate details | Findur | Warehouse |

| 14 | Cash flow event data | All trade cash flow details | Findur | Warehouse |

| 15 | Credit/Market Risk Exposure | Credit and Risk Exposure Definition Details | Findur | Warehouse |

| 16 | Credit/Market Risk Exposure | Credit Risk Exposure By deal | Findur | Warehouse |

| 17 | Credit/Market Risk Exposure | Credit Risk Exposure By Facility Line | Findur | Warehouse |

| 18 | Credit/Market Risk Exposure | Credit/Market Risk Exposure Criteria | Findur | Warehouse |

| 19 | Credit/Market Risk Exposure | Market Risk Exposure By deal | Findur | Warehouse |

| 20 | Credit/Market Risk Exposure | Market Risk Exposure By Facility Line | Findur | Warehouse |

| 21 | Dealer Quote Data | Trades – Bonds | Findur | Warehouse |

| 22 | EOD Market Data | Curve Definition | Findur | Warehouse |

| 23 | EOD Market Data | Curve Heirarchy (parent link) | Findur | Warehouse |

| 24 | EOD Market Data | Grid Point and Prices – Monthly granularity output | Findur | Warehouse |

| 25 | EOD Market Data | Grid Point and Prices – Pricing grid points only | Findur | Warehouse |

| 26 | EOD Market Data | Market Data Object (MDO) Mappings | Findur | Warehouse |

| 27 | EOD Market Data | Spot FX | Findur | Warehouse |

| 28 | Full set of Trades | Commodity Swap Deal | Findur | Warehouse |

| 29 | Full set of Trades | Commodity Swap Deal Profile | Findur | Warehouse |

| 30 | Full set of Trades | Deal Master – All Deals | Findur | Warehouse |

| 31 | Full set of Trades | Holiday Schedule on Side information | Findur | Warehouse |

| 32 | Full set of Trades | Rate Reset Extract | Findur | Warehouse |

| 33 | Full set of Trades | Security Static – Customised Profiles | Findur | Warehouse |

| 34 | Full set of Trades | Security Static – Interest details | Findur | Warehouse |

| 35 | Full set of Trades | Security Static – Interest details | Findur | Warehouse |

| 36 | Full set of Trades | Security Static – Principal details | Findur | Warehouse |

| 37 | Full set of Trades | Security Static – Principal details | Findur | Warehouse |

| 38 | Full set of Trades | Security Static – Repricing details | Findur | Warehouse |

| 39 | Full set of Trades | Security Static – Repricing details | Findur | Warehouse |

| 40 | Full set of Trades | Trade – Cash | Findur | Warehouse |

| 41 | Full set of Trades | Trades – Bonds | Findur | Warehouse |

| 42 | Full set of Trades | Trades – Futures | Findur | Warehouse |

| 43 | Full set of Trades | Trades – FX | Findur | Warehouse |

| 44 | Full set of Trades | Trades – Interest rate swaps | Findur | Warehouse |

| 45 | Full set of Trades | Trades – Loans & Deposits | Findur | Warehouse |

| 46 | Full set of Trades | Trades – Repo Collateral | Findur | Warehouse |

| 47 | Full set of Trades | Trades – Repurchase Agreement | Findur | Warehouse |

| 48 | Full set of Trades | Tran Info – additional trade data | Findur | Warehouse |

| 49 | GL Entries | Account Journal Balances | Findur | Warehouse |

| 50 | GL Journals | Account Journal Entries | Findur | Warehouse |

| 51 | Performance Measurement | Instrument Level Results (AUD) | Findur | Warehouse |

| 52 | Performance Measurement | Instrument Level Results (Trade Currency) | Findur | Warehouse |

| 53 | Performance Measurement | Performance Attrribution Results | Findur | Warehouse |

| 54 | Performance Measurement | Portfolio Level Returns (AUD) | Findur | Warehouse |

| 55 | Performance Measurement | Portfolio Level Returns (Trade Currency) | Findur | Warehouse |

| 56 | Performance Measurement | Security Definition | Findur | Warehouse |

| 57 | Performance Metrics | PNL Explain | Findur | Warehouse |

| 58 | Profit & Loss Data | PNL Details | Findur | Warehouse |

| 59 | Risk / Var | Aggregated VaR Result | Findur | Warehouse |

| 60 | Risk / Var | PNL Vectors | Findur | Warehouse |

| 61 | Risk Data | Tran Gpt Delta By Leg | Findur | Warehouse |

| 62 | Security Data | Security Static – Bond Master | Findur | Warehouse |

| 63 | Security Data | Security Static – Futures Master | Findur | Warehouse |

| 64 | Security Data | Security Static – Interest details | Findur | Warehouse |

| 65 | Security Data | Security Static – Principal details | Findur | Warehouse |

| 66 | Security Data | Security Static – Repricing details | Findur | Warehouse |

| 67 | Security Data | Security Static Data (also known as Holding Instrument in Findur) | Findur | Warehouse |

| 68 | Static Data | Broker Fee Types | Findur | Warehouse |

| 69 | Stress Scenarios | Credit Spread Stress Scneario | Findur | Warehouse |

| 70 | Stress Scenarios | IR-Basis Stress Scenario | Findur | Warehouse |

| 71 | Trade Settlements | Trade settlement event extract | Findur | Warehouse |

| 72 | Transaction Fees | Full transaction fee extract | Findur | Warehouse |

Extracting Report Structure – Creating Data Definition

It is possible to generate the report definition by extracting the report structure from the Openlink tables directly. I found this useful as it provides a simple way to create an accurate and up to date interface specification document. The following SQL Queries can be used, and example output is shown below each query:

Report / Column Details

| select r.dxr_definition_id defn_id, r.dxr_definition_name report_name, f.dxr_def_srcfld_seqid seq_num, t.dxr_srctbl_name source_table, f.dxr_srctbl_fldname source_field |

| from dxr_definition r, dxr_def_srctbl t, dxr_def_srcfld f |

| where r.dxr_definition_name like ‘<report name prefix> %’ |

| and r.dxr_definition_id = t.dxr_definition_id |

| and r.dxr_definition_ver = t.dxr_definition_ver |

| and t.dxr_definition_id = f.dxr_definition_id |

| and t.dxr_definition_ver = f.dxr_definition_ver |

| and t.dxr_def_srctbl_seqid = f.dxr_def_srctbl_seqid |

| order by r.dxr_definition_name, f.dxr_def_srcfld_seqid |

Example output

| defn_id | report_name | seq_num | source_table | source_field |

20159 | ACCOUNT BAL EXTRACT | 6 | SQL_CN_Nostro_Account_Posn_Hist | account_id |

20159 | ACCOUNT BAL EXTRACT | 7 | SQL_CN_Nostro_Account_Posn_Hist | portfolio_id |

20159 | ACCOUNT BAL EXTRACT | 8 | SQL_CN_Nostro_Account_Posn_Hist | currency_id |

20159 | ACCOUNT BAL EXTRACT | 9 | SQL_CN_Nostro_Account_Posn_Hist | report_date |

20159 | ACCOUNT BAL EXTRACT | 10 | SQL_CN_Nostro_Account_Posn_Hist | position |

Report Field Details

| select r.dxr_definition_name report_name, o.dxr_def_outfld_seqid field_num, |

| o.dxr_outfld_name source_field_name, o.dxr_outfld_title output_col_title, o.dxr_outfld_name output_col_name, t.dxr_outfld_data_type_name data_type, o.dxr_outfld_format format, |

| o.dxr_definition_id defn_id, o.dxr_definition_ver dfn_ver, o.dxr_def_outfld_source source |

| from dxr_definition r, dxr_def_outfld o, dxr_outfld_data_type t |

| where r.dxr_definition_name like ‘<report name prefix> %’ |

| and r.dxr_definition_id = o.dxr_definition_id |

| and r.dxr_definition_ver = o.dxr_definition_ver |

| and o.dxr_outfld_data_type_id = t.dxr_outfld_data_type_id |

| order by r.dxr_definition_name, o.dxr_def_outfld_seqid |

Example Output

| report_name | ACCOUNT BAL EXTRACT |

| field_num | 1 |

| source_field_name | account_id |

| output_col_title | account_id |

| output_col_name | account_id |

| data_type | Integer |

| format | ColumnValue |

| defn_id | 20159 |

| dfn_ver | 6 |

| source | #6 |

Example Plug-in module

The following section shows a cut-down version of an OpenComponents module used to extract simulation results:

The plugins are defined as AbstractGenericScripts

public

class

RB_PLUGIN_TranLegSimResult : Olf.Embedded.Generic.AbstractGenericScript

The entry point is therefore the execute function:

public

override Olf.Openrisk.Table.Table Execute(Session session, ConstTable argt)

Create an output table with the appropriate column structure:

Table tblOutput = CreateOutputTable(session);

Then process the report parameters – note a query result table is useed which is not the standard query_result, and the name is passed:

// see if ReportBuilder report parameters is passed

if (argt.GetColumnId(“PluginParameters”) > 0)

Table rbParamsTbl = argt.GetTable(“PluginParameters”, 0);

//session.Debug.ViewTable(rbParamsTbl);

iQueryId = argt.GetInt(“QueryResultID”, 0);

sQueryResultTable = argt.GetString(“QueryResultTable”, 0);

returnTbl = session.TableFactory.CreateTable(“returnt”);

if (iQueryId > 0)

{

// find the reval type flag

int iParamRow = rbParamsTbl.Find(0, “REVAL_ADHOC”, 0);

if (iParamRow >= 0)

bRunTypeIntraDay = rbParamsTbl.GetString(1, iParamRow).Equals(“1”);

Create Output table:

private

Table CreateOutputTable(Session session)

{

Table tblOutput = session.TableFactory.CreateTable(“tran leg sim”);

tblOutput.AddColumn(“deal_num”, EnumColType.Int);

tblOutput.AddColumn(“ins_num”, EnumColType.Int);

.. etc

}

Then load the data:

// load the deal list

String sSQL = “select a.tran_num, a.deal_tracking_num deal_num, a.internal_portfolio, a.toolset, isnull(p.cflow_type, 0) as cflow_type\n” +

“from “ + sQueryResultTable +

” q, ab_tran a left outer join parameter p on p.ins_num = a.ins_num and p.param_seq_num = 0\n” +

“where a.tran_num = q.query_result\n” +

“and q.unique_id = “ + iQueryId;

Table tblDealList = session.IOFactory.RunSQL(sSQL);

// create a portfolio list to iterate over and load results from EIOD sim or run reval

Table tblPtfList = session.TableFactory.CreateTable();

tblPtfList.SelectDistinct(tblDealList, “internal_portfolio”, “[IN.tran_num] > 0”);

for (int i = 0; i < tblPtfList.RowCount; i++)

{

int iPtfNum = tblPtfList.GetInt(0, i);

Portfolio ptf = session.StaticDataFactory.GetReferenceObject<Portfolio>(iPtfNum);

Using (Table tblDeals = session.TableFactory.CreateTable())

{

tblDeals.Select(tblDealList, “tran_num, deal_num, toolset, cflow_type”, “[IN.internal_portfolio] == “ + iPtfNum);

QueryResult qr = session.IOFactory.CreateQueryResult();

qr.Add(tblDeals.GetColumnValuesAsInt(“tran_num”));

if (bRunTypeIntraDay)

RunSimForPortfolio( );

else

LoadResultForPortfolio();

qr.Clear();

}

}

private

void RunSimForPortfolio(Session session, QueryResult qr, Portfolio ptf, Table tblTranSimResults, Table tblDeals, String logFile)

{

Using (Simulation sim = session.SimulationFactory.CreateSimulation(“Report Sim”))

{

sim.UseMarketPrices = false;

sim.SetRevalType(EnumSimulationRunType.IntraDay);

Scenario scen1 = session.SimulationFactory.CreateScenario(“Base”);

// set base currency

Currency ccy = session.StaticDataFactory.GetReferenceObject<Currency>(<base ccy>);

scen1.Currency = ccy;

// create result types

ResultTypes res1 = session.SimulationFactory.CreateResultTypes();

res1.Add(EnumResultType.Mtm)

.. add all results

// Add results to scenario

scen1.ResultTypes = res1;

sim.AddScenario(scen1);

SimResults simRes = sim.Run(qr);

RevalResults scenResults = simRes.GetScenarioResults(1);

RevalType EODSimRevalType = session.SimulationFactory.GetRevalType(EnumSimulationRunType.Eod);

UnpackResults(session, qr, ptf, EODSimRevalType, scenResults, tblTranSimResults, tblDeals, logFile);

sim.Dispose();

}

// Load EOD results for portfolio

private

void LoadResultForPortfolio(Session session, RevalType SimRevalType, QueryResult qr, Portfolio portfolio, Table tblTranSimResults, Table tblDeals, String logFile)

{

// Set the retrieval parameters

bool cacheResults = false;

// Retrieve the results

Using (SimResults simResults = session.SimulationFactory.RetrieveSimulationResults(portfolio, SimRevalType, session.Market.CurrentDate, cacheResults))

{

if (simResults == null)

throw

new

Exception(“No EOD Sim Results for portfolio:” + portfolio.Name);

RevalResults scenResults = simResults.GetScenarioResults(1);

UnpackResults();

}

}

// Extract a unique set of index rates / prices from all sim results

private

void UnpackResults(Session session, QueryResult qr, Portfolio portfolio, RevalType SimRevalType, RevalResults scenResults, Table tblTranSimResultsAll, Table tblDeals, String logFile)

{

// create any user defined results needed

ResultType resultPremiumDiscount = session.SimulationFactory.GetResultType(UDSR_PREMIUM_DISCOUNT);

Table tblTranSimResults = tblTranSimResultsAll.CloneStructure();

ConstTable tblSimTranResults = ScenResults.GetResultClassTable(EnumResultClass.Tran);

// extract the results

String sWhat = “deal_num, ins_num, deal_leg, disc_idx, proj_idx, currency_id,” +

(int)EnumResultType.Mtm + “->mtm,” +

(int)EnumResultType.BaseMtm + “->base_mtm,” +

Etc .. for all relevant fields

tblTranSimResults.Select(tblSimTranResults, sWhat, “[IN.deal_num] > 0”);

}